Evan Shapiro, the Media Cartographer, says that “Traditional, Streaming, and Creators” no longer come close to describing what’s happening in Media today.

He says the “Affinity Economy” has supplanted those categories:

“It’s a complex, multi-layered, infinitely-fragmented biosphere that generates value from engagement and passion rather than reach and frequency.”

And it’s driven by demographics (and corresponding technological behaviour).

Evan points out that Boomers and Gen X account for 29% of the global population whereas Gen Z and Gen Alpha total 48%…

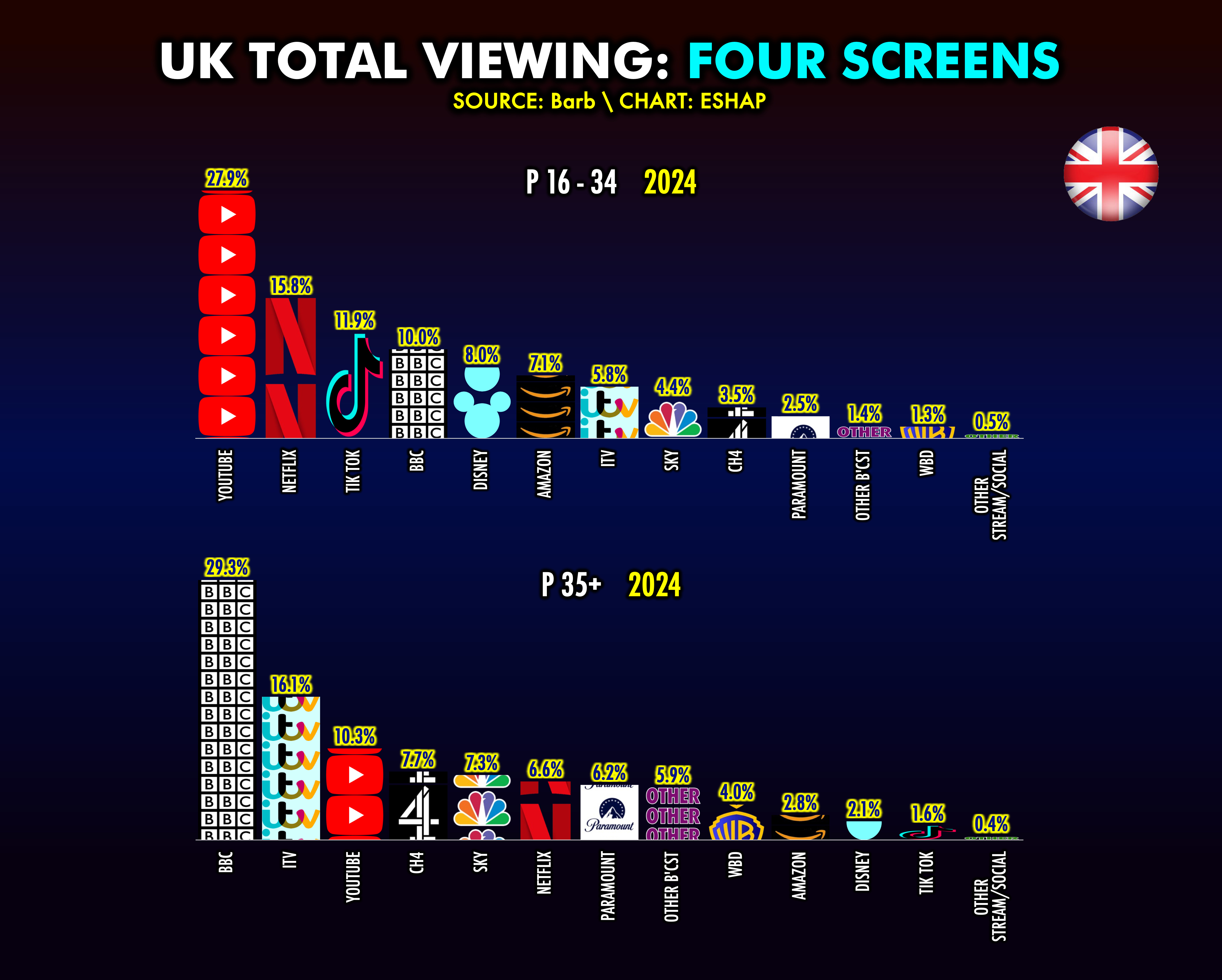

…which matters because younger people mostly consume online media, whereas older folks mostly watch legacy media. (Digital Natives will become the majority before 2035.)

He argues that Broadcast Media shouldn’t live in the past; to remain relevant, it needs to “entertain and inform audiences (and fans) of all ages and generations — Boomers on The Telly, Gen Z on The YouTube, and Gen A on The TikTok.”

My take: this is kinda obvious, but it’s nice to see someone on the inside shake the cage. I’m actually surprised that, thirty years in, Big Business hasn’t locked down the Internet yet. Anyone can post to YouTube and TikTok… for now.