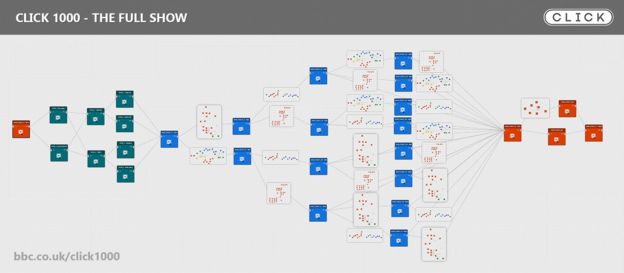

This week the BBC celebrated the 1000th episode of their technology magazine show Click with an interactive issue.

Access the show and get prepared to click!

One of the pieces that caught my eye was an item in the Tech News section about interactive art, called Mechanical Masterpieces by artist Neil Mendoza.

The exhibit is a mashup of digitized high art and Rube Goldberg-esque analogue controls that let the participants prod and poke the paintings. Very playful! I’ve scoured the web to find some video. This is Neil’s version of American Gothic:

Getting ready for the weekend with another piece from Neil Mendoza’s Mechanical Masterpieces, part of #ToughArt2018. pittsburghkids.org/exhibits/tough-art

Posted by Children's Museum of Pittsburgh on Friday, September 28, 2018

And here is his version of The Laughing Cavalier:

Check out Neil’s latest installation/music video.

My take: I love Click and I love interactive storytelling. But I’m not sure the BBC’s experiment was entirely successful. What I thought was missing was an Index, a way to quickly jump around their show. For instance, it was tortuous trying to find this item in the Tech News section. Of course, Click is in love with their material and expects viewers to patiently lap up every frame, even as they click to choose different paths through the material. But it’s documentary/news content, not narrative fiction, and I found myself wanting to jump ahead or abandon threads. On the other hand, my expectations of a narrative audience looking for A-B interactive entertainment is that they truly are motivated to explore various linear paths through the story. And an Index would reveal too much of what’s up ahead. But I wonder if that’s just me, as a creator, speaking. Perhaps interactive content is relegated to the hypertext/website side of things, versus stories that swallow you up as they twist and turn on their way to revealing their narratives.